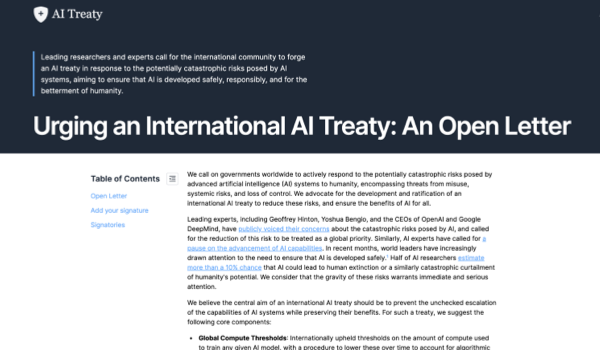

LONDON - On the eve of the first global summit dedicated to AI safety, top experts have signed an open letter calling for an international AI safety treaty. The signatories include Yoshua Bengio, Turing Award winner and "godfather of AI"; Yi Zeng, the leading expert in AI safety and governance in China, who recently briefed the UN Security Council on AI risks; Bart Selman, former President of the AAAI; Gary Marcus, renowned cognitive scientist; Victoria Krakovna, Senior Research Scientist at Google DeepMind and co-founder of the Future of Life Institute; and Luke Muehlhauser, a member of the board of leading AI company Anthropic. The letter calls for:

The development and ratification of an AI safety treaty that achieves widespread agreement across the international community, and the creation of a working group at the UK AI Safety Summit, with broad international support, to develop a blueprint for that treaty.

Such an AI safety treaty should include several core components, including:

- Global compute thresholds: Globally established limits on the amount of computation that can be used to train any given model.

- A CERN for AI safety: A grand-scale collaborative effort to pool resources, expertise, and knowledge in the service of AI safety.

- A compliance commission: Responsible for monitoring treaty compliance, a role similar to that of the International Atomic Energy Agency (IAEA).

The aims of such a treaty are:

- To mitigate the catastrophic risks posed by AI systems to humanity, both by preventing the unchecked escalation of AI capabilities and by directing a surge of resources and expertise into safety research.

- To ensure the benefits of AI for all.

This is a historic coalition of experts w

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.
