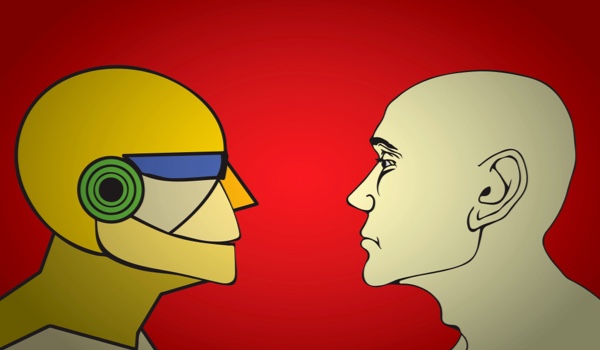

NEW YORK - Recently, Google engineer Blake Lemoine was put on leave after claiming the company’s chatbot LaMDA had shown signs of being sentient. The engineer based his claim on a conversation he had had with the chatbot, and in particular on the fact that LaMDA expressed a fear of being turned off, which the chatbot likened to death.

The claim was quickly contested on the simple grounds that the machine’s responses are derived from mining vast amounts of text, so it surely had the opportunity to ‘read’ science fiction, where one easily stumbles upon sentient machines giving this type of answer. More precisely, LaMDA did exactly what it had been trained to do as a machine: pick the most likely stream of data to match the conversation. This is similar to the well-known thought experiment called the ‘Chinese room,’ in which someone who follows a set of predetermined rules for answering written questions in Chinese may trick the person asking the questions into believing that they speak Chinese.

However, the fact that people may believe machines are sentient is, I would argue, more important than whether they actually are. The real-life consequences of this belief are mixed, and so one must tread carefully.

Before considering the question of sentient artificial intelligence (AI), one should take a look at Emotion AI. This is a type of AI that can identify and interpret the signs of a person’s emotional state. If designed to do so, it can also respond in ways that its designers deem appropriate for the situation. There are therefore two overarching ways in which Emotion AI can be used: on the one hand, presumably to inform third parties about a person’s emotional state, and on the other hand, to act as an emotionally aware interlocutor in a specific interaction setting. Both could have an important impact o

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.
