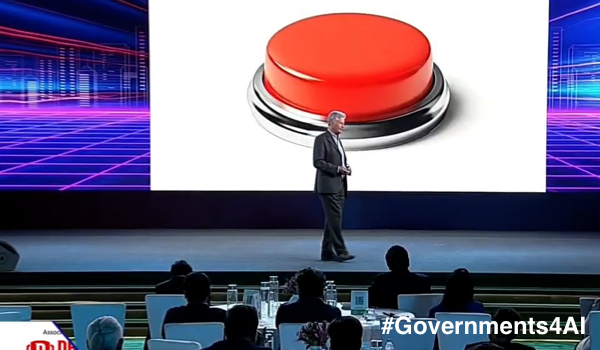

LONDON - Imagine that you and I are in my laboratory, and I show you a big red button. I tell you that if I press this button, then you and all your family and friends - in fact the entire human race - will live very long lives of great prosperity and in great health. Furthermore, the environment will improve and levels of inequality will fall, both in your country and around the world.

Of course, I add, there is a catch. If I press the button, there is also a chance that the entire human race will go extinct. I cannot tell you the exact probability of this happening, but I estimate it to be somewhere between 2 and 25 percent within five to 10 years.

In this hypothetical situation, would you want me to press the button, or would you urge me not to?

I have posed this question several times while giving keynote talks around the world, and the result is always the same. A few brave souls raise their hands to say yes, while the majority of the audience laughs nervously and gradually raises their hands to say no. Additionally, a surprising number of people seem to have no opinion either way - possibly because this third group does not think the question is serious.

It is serious. If the world continues to develop advanced artificial intelligence (AI) at anything like the current rate, then - in several decades, or perhaps sooner - someone will develop the world’s first superintelligence. In this context, superintelligence refers to a machine that exceeds human capability in all cognitive tasks. The intelligence of machines can be improved and ours cannot, so such a machine will go on, probably quite quickly, to become much, much more intelligent than us.

Some people think the advent of superintelligence on this planet inevitably means that humans will quickly go extinct. I don’t agree with this, but extinction is a possible outcome that I think we should take seriously.

Given this backdrop, why is there n

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.
