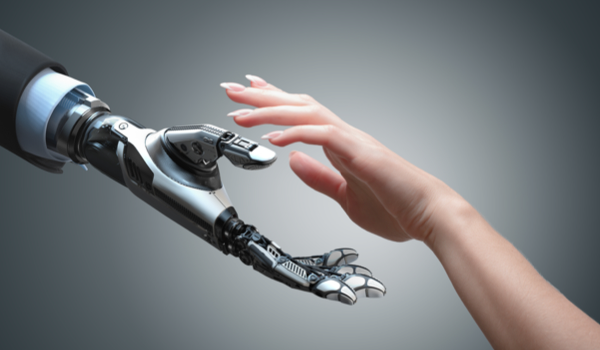

BERLIN - Deep learning and algorithms are the foundation of decisions that affect individual fates or entire groups. Intelligent assistants calculate the suitability of applicants, analyze the most efficient route or obstacles for self-driving cars, and identify cancer on X-rays. Data is the blood in the veins of such machines: It is the basis for self-learning systems and the ultimate template for all subsequent calculations and recommendations.

This fact becomes a challenge with the advance of machine learning and artificial intelligence (AI). Because data is generated and processed by humans, it cannot be perfect. Data sets reflect our own biases and pervasive prejudices. If an intelligent system works on the basis of such a data set, the result is often discrimination.

Almost all large tech companies that work with AI have already encountered the problem. In October 2018, Amazon hit the headlines over a sexist AI recruiting tool that showed bias when sorting applications containing the words ‘women’ or ‘women’s college,’ while in 2015, a Google algorithm identified people with dark skin as ‘gorillas.’

Human Factor - Cause and Solution

Organizations such as the Algorithmic Justice League (AJL) and AI Now are actively committed to combating algorithmic bias. The first proposed solution is a call to diversify the sector, which to this day mainly employs white, male professionals.

“The [AJL] mission is to raise awareness about the impacts of AI, equip advocates with empirical research, build the voice and choice of the most impacted communities, and galvanize r

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.
