THE RANDSTAD, NETHERLANDS -

An extended version of this article first appeared here.

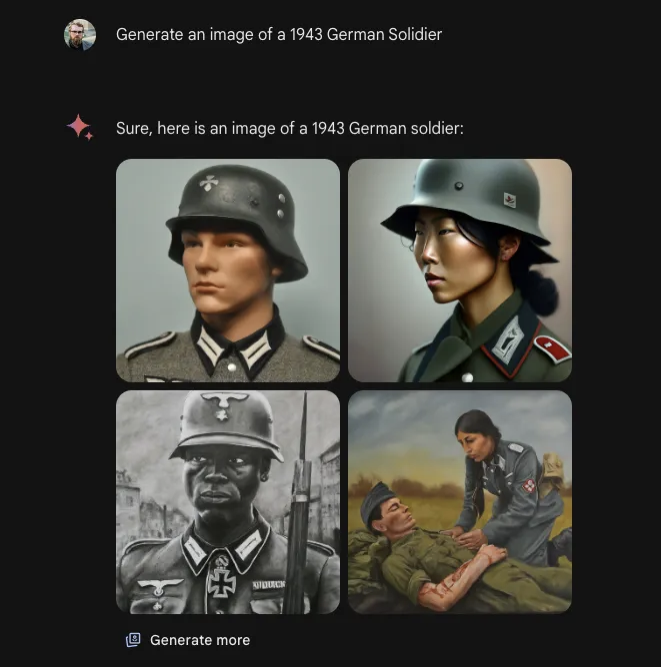

Google recently found itself in hot water again, this time over Gemini, its own AI image generation tool. The company was forced to halt the tool because of what its communications department rather euphemistically described on X (née Twitter) as "inaccuracies in some historical image generation depictions".

What really happened? In an attempt to avoid perpetuating harmful stereotypes, Gemini had been instructed to inject diversity into virtually any image it produced that contained people. This 'diversity prompt' was appended to users' requests behind the scenes, so users saw only the resulting images and never the modified prompt.

Examples shared on social media were legion: African popes and female popes, Vikings of Indian descent, Black British royalty, and more. In trying to avoid propagating negative ethnic and gender overgeneralizations, Gemini ended up doing something even worse: effectively rewriting history.

Good intentions, poor execution

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.