BELMONT, MASSACHUSETTS - The emergence in the 1990s of a new global computer network that eased the creation, sharing and use of data, and fueled its incredibly fast growth, brought on the current triumph of the connectionist school of artificial intelligence (AI) over symbolic AI and other competing machine learning (ML) methods. Learning from examples, or learning from data, became the old-new focus of efforts to develop thinking machines.

Big Data, big processing

The internet spawned new engineering challenges in managing and mining very large volumes of data stored in a miscellany of formats. Spam detection, recommendation, inventory prediction, information search, and social network analysis all required computer programs able to quickly sift data, spot hidden patterns, and perform desired actions.

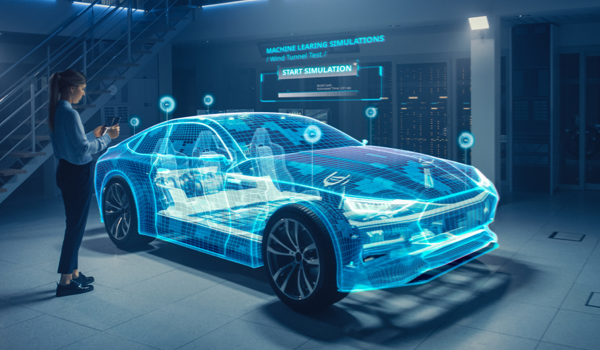

Artificial neural networks, now promoted as ‘deep learning’ (DL), held a key advantage: ‘automated feature extraction.’ Competing ML methods required considerable engineering skill and domain expertise to hand-design the features, i.e., the key characteristics of the data that determine the desired output, whereas deep learning learns these features automatically from the data.

This meant it no longer mattered how ‘logical’ DL was, nor whether its proponents were a bunch of ‘romantics.’ What happened inside the ‘black box’ was no longer of consequence, either. Instead, the real-world result became the touchstone, i.e., whether the program did what was expected, and did it better than other methods of learning from data. Capturing the complexity of the world as reflected in data, and overcoming the noise and redundancy within them, became paramount. In this new world, characterized by ‘the unreasonable effectiveness of data,’ the most efficacious method was deep learning.

The momentum driving DL has been b

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.