BERLIN -

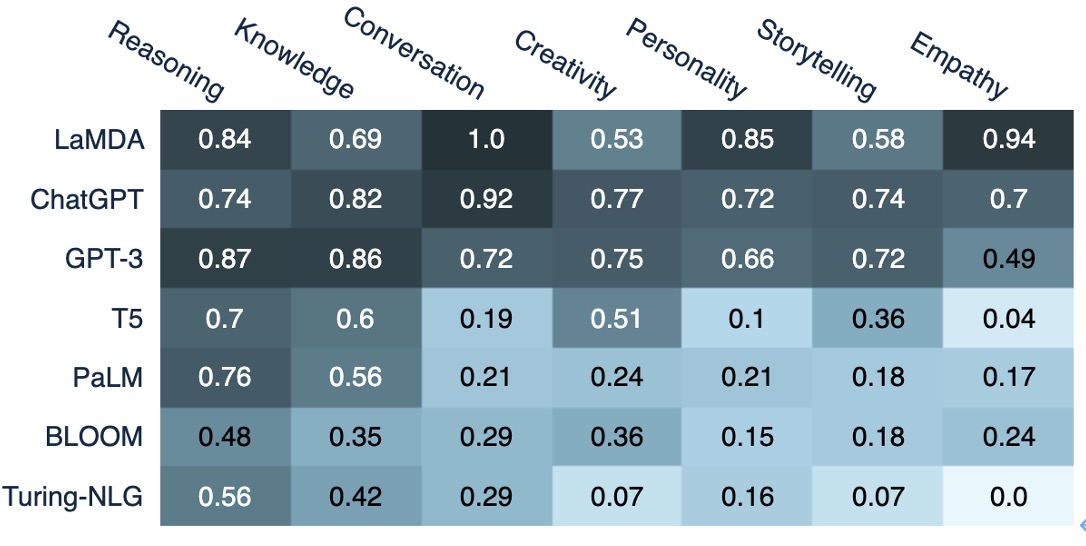

How popular LLMs score along human cognitive skills (Source: semantic embedding analysis of ca. 400k AI-related online texts since 2021)

Disclaimers: This article was written without the support of ChatGPT. Also, all images are by the author unless otherwise noted.

In the last couple of years, large language models (LLMs) such as ChatGPT, T5 and LaMDA have demonstrated an amazing ability to produce human-like language. Casual observers are quick to attribute intelligence to models and algorithms, but how much of this is emulation, and how much is actually reminiscent of the rich language capability of humans?

When confronted with the natural-sounding, confident outputs of these models, it is easy to forget that language per se is only the tip of the communication iceberg. Its full power unfolds in combination with a wide range of complex cognitive skills relating to perception, reasoning, and communication. While humans acquire these skills naturally from the surrounding world as they grow, the learning inputs and signals available to LLMs are rather meager. They are forced to learn only from the surface form of language, and their success criterion is not communicative efficiency but the reproduction of high-probability linguistic patterns. In a business context, giving too much power to an LLM can lead to unpleasant surprises. When it runs up against its own limitations, an LLM will not admit to them. Instead, it will gravitate to the other extreme, producing nonsense, toxic content, or even dangerous advice, all with a high level of confidence. A medical virtual assistant driven by GPT-3 might, at a certain point in the conversation, even advise its users to kill themselves.

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.



2023-05-06