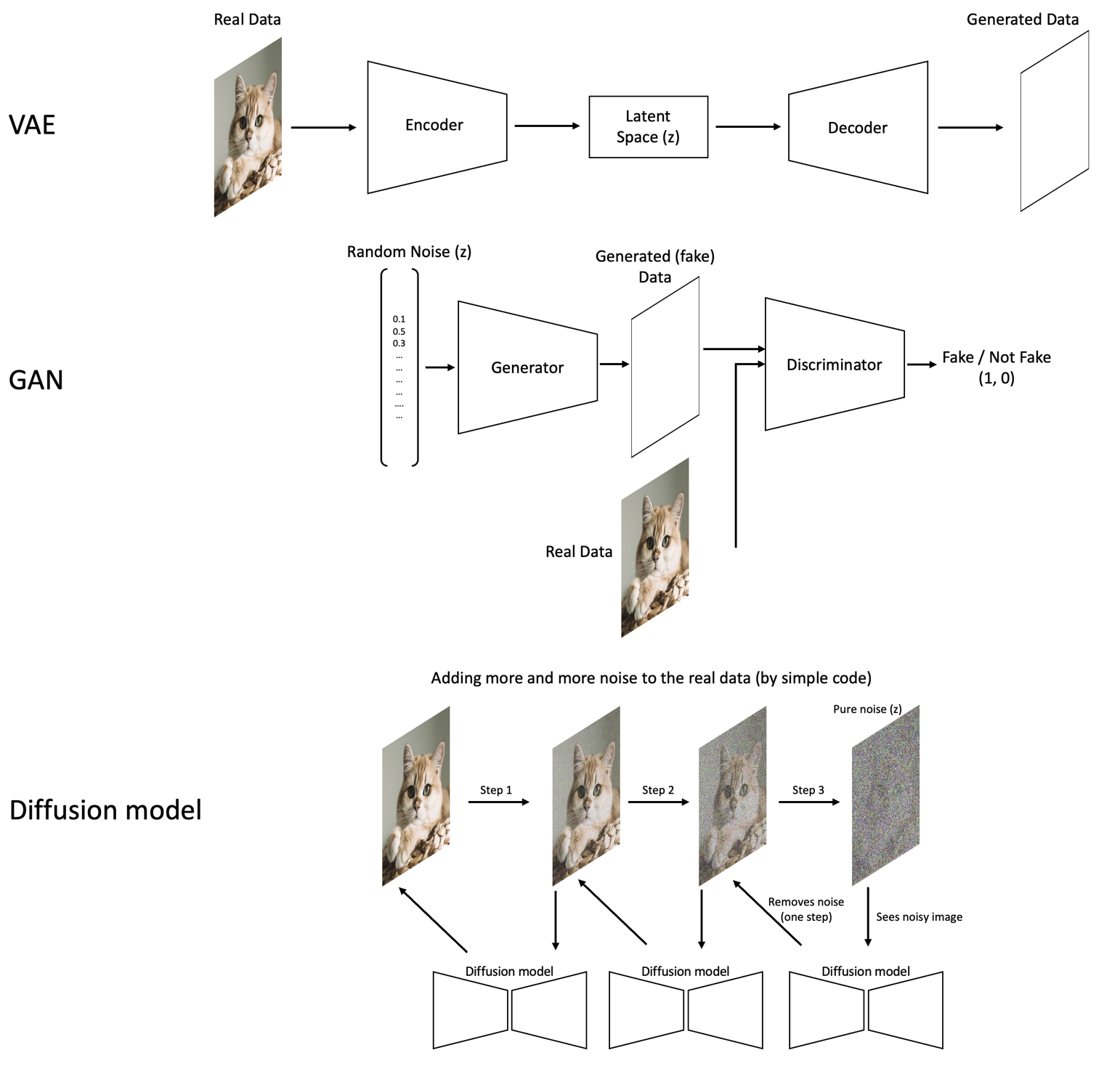

TEHRAN - Generative models learn the distribution of their training data, which lets them generate similar data samples once trained. Until recently, variational autoencoders (VAEs) and generative adversarial networks (GANs) were the two most popular approaches for building generative models. Diffusion models have since taken their place and now outperform them in most applications. VAEs are encoder-decoder models: the encoder maps input data to a distribution over a learned latent space, and the decoder maps points in that space back to the data space. New data samples are generated by drawing points from the latent distribution and passing them through the decoder.
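As a rough illustration of the VAE sampling described above, the sketch below shows the pieces a VAE typically combines: the reparameterization trick used during training, the KL-divergence term that pulls the encoder's latent distribution toward a standard normal prior, and generation by decoding draws from that prior. The standard normal prior is an assumption (the most common choice, not something the article specifies), and the linear `decode` function with its weights is a hypothetical stand-in for a trained decoder network.

```python
import math
import random

def reparameterize(mu, logvar, rng):
    # Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1),
    # so the sample stays differentiable with respect to mu and logvar during training
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    # KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over latent dimensions;
    # this term keeps the encoder's latent distribution close to the prior
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))

def decode(z, weights, bias):
    # Hypothetical linear decoder x_hat = W z + b; a real VAE uses a trained neural network
    return [sum(w * zi for w, zi in zip(row, z)) + b for row, b in zip(weights, bias)]

def generate(n_samples, latent_dim, weights, bias, seed=0):
    # Generation needs only the decoder: draw z from the prior N(0, I), then decode it
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        samples.append(decode(z, weights, bias))
    return samples
```

For example, `generate(3, 2, W, b)` with a 4-by-2 weight matrix `W` and a 4-element bias `b` returns three 4-dimensional samples. The encoder is omitted because, once training is done, it is not needed for generation.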

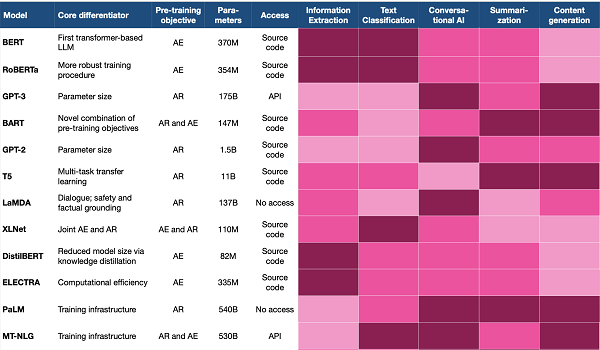

Different families of generative models in deep learning

Figure 1 | VAE vs GAN vs Diffusion models | Image by the author

GANs consist of two networks trained simultaneously: a generator and a discriminator. The generator turns random noise into new data samples and tries to fool the discriminator into believing these synthesized samples are real. The discriminator, a binary classifier, sees both real and generated samples during training and learns to tell them apart without being fooled by the generator.

Diffusion models are trained differently. Random Gaussian noise is added to each image in the training set over many steps until the image is no longer recognizable and has become pure noise. No learning takes place in this stage; it is a fixed procedure that needs only simple code. The diffusion model's task is to learn the reverse: to denoise these images and recover the original image.
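The noising stage really is just a few lines of code. This minimal sketch assumes the DDPM formulation, in which step t mixes the previous image with fresh Gaussian noise as x_t = sqrt(1 - beta_t) * x_{t-1} + sqrt(beta_t) * eps, using a linear schedule for beta_t from 1e-4 to 0.02; the article does not specify a schedule, and the image is treated here as a flat list of pixel values. The function names are hypothetical.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    # Variance of the noise added at each step, increasing linearly (assumed DDPM-style schedule)
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def forward_step(x_prev, beta, rng):
    # One noising step: x_t = sqrt(1 - beta) * x_{t-1} + sqrt(beta) * eps, eps ~ N(0, 1)
    keep, add = math.sqrt(1.0 - beta), math.sqrt(beta)
    return [keep * v + add * rng.gauss(0.0, 1.0) for v in x_prev]

def noise_image(x0, betas, seed=0):
    # Apply every step in turn; returns the whole trajectory x_0, x_1, ..., x_T.
    # Nothing here is learned: the schedule is fixed in advance.
    rng = random.Random(seed)
    trajectory = [list(x0)]
    for beta in betas:
        trajectory.append(forward_step(trajectory[-1], beta, rng))
    return trajectory

def signal_fraction(betas):
    # alpha_bar_T = prod(1 - beta_t); the original image survives in x_T
    # only scaled by sqrt(alpha_bar_T)
    return math.prod(1.0 - b for b in betas)
```

With 1,000 steps of this schedule, `signal_fraction` is far below 1e-3, so well under 1% of the original signal's scale remains and the final image is essentially pure Gaussian noise. The sqrt(1 - beta_t) scaling keeps the pixel variance close to 1 throughout, which is why the end point looks like standard normal noise rather than a blown-up image.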

This denoising is also done in multiple steps, each removing part of the artificial noise added earlier. In this way, the model learns both the distribution of the training data and the noise distributions at the different steps.
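The step-by-step denoising can be sketched in the same hedged way. Again assuming the DDPM formulation, a network is trained to predict the noise eps that produced x_t, using the closed-form jump x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps (alpha_bar_t being the product of (1 - beta_s) up to step t) and a mean-squared-error loss; sampling then removes a little of the predicted noise at each step. Training a real network is out of scope here, so `oracle_noise_predictor` is a hypothetical stand-in that returns the exact noise when the whole dataset is a single known point. With it, the reverse loop recovers that point.

```python
import math
import random

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    # Linear beta schedule (assumed, DDPM-style) plus cumulative products alpha_bar_t
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for b in betas:
        running *= 1.0 - b
        alpha_bars.append(running)
    return betas, alpha_bars

def training_example(x0, t, alpha_bars, rng):
    # Closed-form jump to step t: x_t = sqrt(abar_t) x0 + sqrt(1 - abar_t) eps.
    # A network would be trained to predict eps from (x_t, t) by minimizing mse(prediction, eps).
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = alpha_bars[t]
    x_t = [math.sqrt(a) * v + math.sqrt(1.0 - a) * e for v, e in zip(x0, eps)]
    return x_t, eps

def mse(pred, target):
    # Mean squared error between predicted and true noise
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(pred)

def oracle_noise_predictor(x_t, t, alpha_bars, data_point):
    # Hypothetical stand-in for a trained network: exact noise when the data is one known point
    a = alpha_bars[t]
    return [(v - math.sqrt(a) * c) / math.sqrt(1.0 - a) for v, c in zip(x_t, data_point)]

def reverse_process(x_T, betas, alpha_bars, predict_noise, seed=0):
    # Denoise step by step from the last step back to the first,
    # removing part of the predicted noise each time
    rng = random.Random(seed)
    x = list(x_T)
    for t in reversed(range(len(betas))):
        beta, a_bar = betas[t], alpha_bars[t]
        eps_hat = predict_noise(x, t)
        # Posterior mean: (x_t - beta_t / sqrt(1 - abar_t) * eps_hat) / sqrt(1 - beta_t)
        scale = beta / math.sqrt(1.0 - a_bar)
        mean = [(v - scale * e) / math.sqrt(1.0 - beta) for v, e in zip(x, eps_hat)]
        if t > 0:
            # Posterior variance (1 - abar_{t-1}) / (1 - abar_t) * beta_t; add fresh noise
            var = (1.0 - alpha_bars[t - 1]) / (1.0 - a_bar) * beta
            x = [m + math.sqrt(var) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # the final step adds no noise
    return x
```

In practice, `predict_noise` would be a trained neural network (for images, typically a U-Net), and running the loop from fresh Gaussian noise would produce a new sample resembling the training data rather than a single memorized point.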

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.
