SEVILLE, SPAIN - “Should clinicians take a leap of faith and follow whatever recommendation an AI model gives without questioning anything? No. It is better to explain to them why the AI reached that conclusion.”

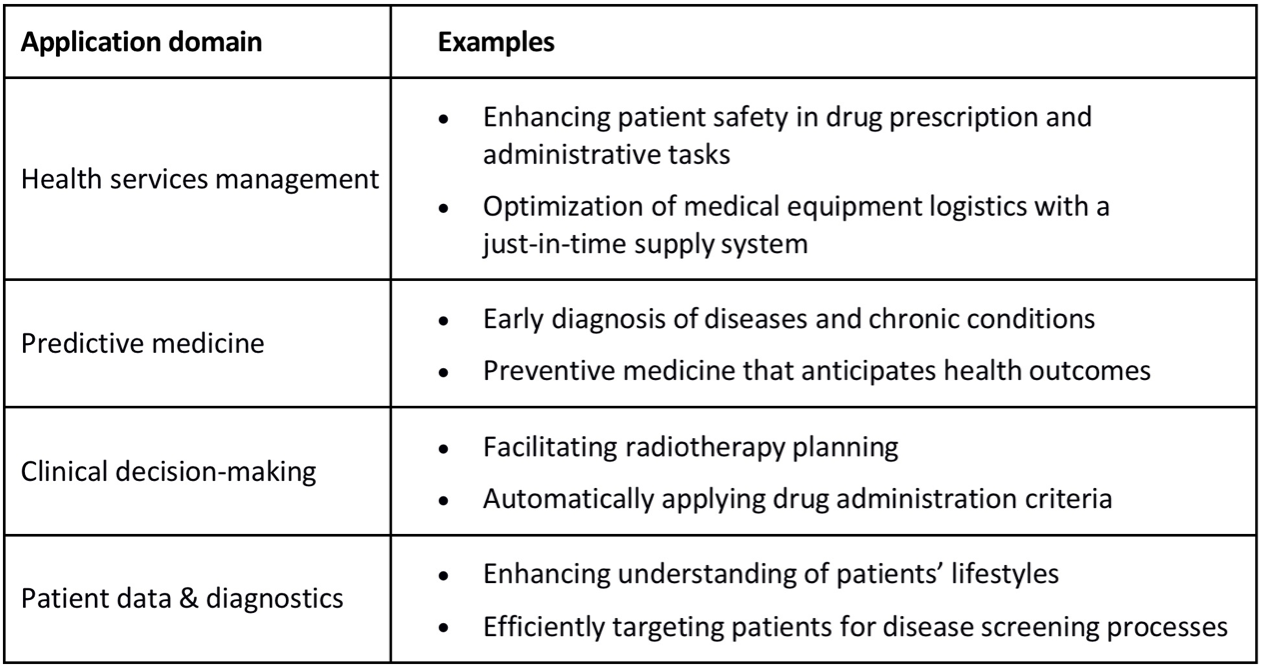

Until now, tested artificial intelligence (AI) applications in healthcare have mainly fallen into four major areas: 1) health services management, which allows healthcare professionals to work more efficiently; 2) predictive medicine, which supports diagnosis, treatment and outcome prediction in clinical settings; 3) clinical decision-making, which facilitates better clinical decisions (or even replaces human judgment) in specific areas; and 4) patient data and diagnostics, which support the management of the vast amounts of clinical data generated by patients and their interactions with the health system.¹

Recent experience has demonstrated the excellent performance of AI applications in specific yet complex tasks, with accuracy improving as the models themselves grow more complex. However, this trend has a downside: the best-performing AI algorithms also turn out to be the most difficult for humans to interpret.

Therefore, if clinicians are to be given the best technological tools available to facilitate their work, should they take a leap of faith and follow whatever recommendation an AI model gives without questioning anything? Clearly, the answer is no. Medicine is a highly sensitive field in which a decision can mean the difference between a patient’s life and death. In this scenario, no one should fully trust what they cannot understand. Besides, most, if not all, medical doctors around the world have been trained under the evidence-based medic

The content herein is subject to copyright by The Yuan. All rights reserved. The content of the services is owned or licensed to The Yuan. Such content from The Yuan may be shared and reprinted but must clearly identify The Yuan as its original source. Content from a third-party copyright holder identified in the copyright notice contained in such third party’s content appearing in The Yuan must likewise be clearly labeled as such.